–Arnav Kaman & Gauri Sidana

Introduction

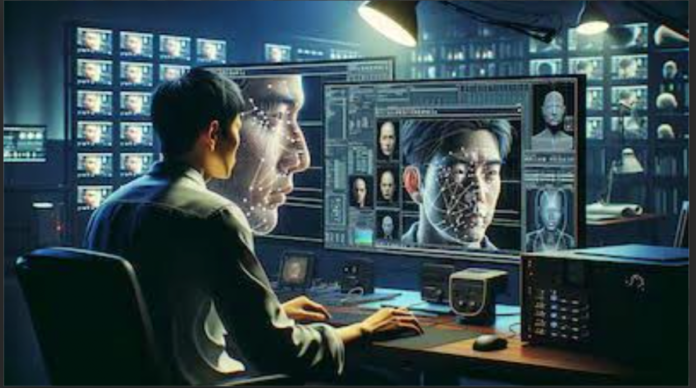

Before the start of the Paris AI summit, President Macron shared a video featuring AI-generated deepfake versions of himself in popular movies and TV shows. It was an ill-judged attempt at humour by a president wanting to bring attention back onto himself, and one cannot help but feel the video is in poor taste. Deepfakes are not merely memes on the timeline, nor only avenues for misinformation; they are also a terrible tool to instil terror and cause injury in the personal lives of millions through the creation of deepfake Non-Consensual Intimate Imagery (hereinafter ‘NCII’).

From Taylor Swift to the Italian Prime Minister to victims of sextortion and blackmail, the creation of deepfake NCII is a manifestation of sexual violence that deeply affects victims across the world, with little agreement across countries on recourse. Though victims and advocates have been shouting from the rooftops for years about the terror of deepfakes, the world is only now slowly waking up. The US Senate has unanimously passed the TAKE IT DOWN Act, drafted to criminalise the publication of AI-generated NCII and to require social media platforms to have procedures in place to remove such content within 48 hours.

India, at the recent AI summit, presented itself as a proponent of strong regulation of AI. As the country is no stranger to such terrible offences, it must take swift and strong action. In this blog, the authors explore why the act should be criminalised, who should be punished, and how to implement such changes in our current systems.

Civil or Criminal

Though civil remedies may be pursued along with criminal cases, it is relevant to draw a distinction and delineate the threshold required for a wrong to be a criminal wrong, and why we believe deepfake NCII goes above and beyond that threshold.

Civil law addresses a wrong by providing compensation from the tortfeasor to the victim. Criminal law, on the other hand, not only brings punitive force against the wrongdoer but also deters more strongly than civil law and reinforces societal norms. The threshold between the two can be drawn from the extent of the social harm caused and the norms violated.

While the courts have observed that the enjoyment of erotic material in private is not a crime per se, the creation of deepfake NCII is rarely a singular act. The use of such technology is interconnected in a web of illegal acts. A Europol report shows how deepfake technology has facilitated various criminal activities, including: “harassing or humiliating individuals online, perpetrating extortion and fraud, facilitating document fraud, falsifying online identities, fooling, and online child sexual exploitation.”

Even if one assumes that deepfake NCII is created for personal ‘enjoyment’, NCII is, by definition, non-consensual: it violates not only consent but also the fundamental right to privacy enshrined in Article 21 of the Constitution. Indian courts have held the publishing of NCII to be violative of fundamental rights in cases such as State of West Bengal v Boxi.

Criminal law enforces social stigma and societal norms to a far stricter degree, punishing even the attempt of such acts, unlike civil law, which depends on an evidentiary standard of harm. Finally, civil suits can be extremely expensive and time-consuming, as it can be difficult to establish and prove liability for mere compensation. The stronger hand of criminalisation will lead to greater deterrence and more expedient justice.

Where the Liability Lies

There are three major actors in the commission of deepfake NCII. Attaching responsibility to any one of these actors does not absolve the others. The first actors are the creators themselves. The second are the sharers who disseminate such NCII. For the purposes of this blog, sharers are treated separately from creators, even though creators may also be sharers. The third is the intermediary body that allows for the circulation of such media.

Creators

While the creators of deepfake NCII are clearly the primary targets for punitive action, effectively identifying the ‘original creator’ presents significant challenges of efficacy and practicability. The ‘perk of anonymity’ makes identification nearly impossible, and one might argue that the creator is oblivious to how the content might be used or shared, but this must not exempt them from liability. The growth of deepfake NCII-related offences has been supported by markets for buying, selling and creating deepfake NCII. As argued above, this market itself goes against individuals’ rights of privacy and consent. To challenge the terror of these individual offences, one must target the whole market and disrupt this deepfake economy.

Sharers

The disseminators, or non-creator consumers, of deepfake NCII are the most easily identifiable actors in the commission of the act. However, this group is, in itself, too heterogeneous to attract equal liability. In cases of sharing material publicly, one might argue that not all sharers have the same level of knowledge that the content is a deepfake, nor the same level of intention to cause harm. Yet one finds it hard to imagine an instance where individuals post deepfake NCII without knowing the contents of their post, even if the disseminators do not have malicious intent. With irreversible harm being caused, intent must be considered irrelevant, and the dissemination of deepfake NCII should attract statutory liability for end users. To publish forged intimate images of individuals on social media is not a harmless activity and it must not be treated as such. Many nations, such as South Korea, have legislated to make deepfake NCII a statutory offence, and the authors concur with the position that end users must be held to a stringent standard of accountability.

Intermediaries

Intermediaries not only provide the platform for the dissemination of deepfake NCII; they are also in the best position to take curative measures. But it is not an easy task to hold them accountable, as victims often find it difficult to pursue action against these intermediaries for the removal of such content. Even after removal, there is nothing to ensure the content does not resurface, repeating the trauma. This can largely be attributed to the difficulty of filtering content. Yet, ironically enough, emerging technologies in the field of AI itself show the way to easier detection of deepfake content. Furthermore, the Delhi HC has notified guidelines for intermediaries to take “reasonable effort” to ensure complete removal in cases of NCII. With legislation in place that criminalises deepfake NCII, intermediaries will be more vigilant in both their removal and their media assessment policies.

The Necessary Steps

Provisions of the Bharatiya Nyaya Sanhita (BNS), while not explicitly designed for it, have been interpreted to encompass online gender-based violence, particularly Sections 75 and 77, which lay down the offences of sexual harassment and voyeurism respectively. Since such content is an affront to the reputation of the victims, a case of criminal defamation under Section 356 may also be made. These sections have been used to combat the publication of NCII and can be interpreted to include instances of deepfake NCII. Sections 294 and 295 of the BNS deem the selling, distribution, and exhibition of obscene objects to be illegal; these sections are particularly relevant in cracking down on the markets for deepfakes. Along with the BNS, there are also relevant provisions in the IT Act, such as Sections 66E and 67A, and the rules guiding intermediary responsibilities can be found in the IT Rules, 2021.

Though current measures exist, the authors suggest that they are not enough, considering their general character and inability to ensure intermediary liability. There must be an amendment to the current legislation or an entirely new criminal legislation, with due regard to evidentiary standards and targeted areas. The legislation or amendment must comprehensively target the deepfake markets that support this enterprise, statutorily criminalise the dissemination of NCII, and finally outline the responsibilities of intermediaries and the standards of removal procedures. Only through an extensive assessment addressing each stage of commission can we create a system that is victim-centric with a strong grip on accountability.

Conclusion

At the recent Paris AI summit, a great number of panel discussions took place on the bright future of AI and humanity, and on how it will revolutionise medicine, administration and human life as a whole. The authors look forward to such a future, but in this blog they also point out how the untamed growth of this technology has run rampant and left great scars on people’s personal lives, scars that threaten never to heal. AI deepfake technology may be beneficial in fields such as fashion shopping and entertainment, but these benefits can never outweigh the great danger it has shown, and continues to show, itself to possess. It is best remembered that a tight leash on technology’s growth will not hinder any well-intentioned development but will effectively weed out bad actors.

If India wishes to truly harness the potential of artificial intelligence for human development and the common good, it must address the most immediate and extreme dangers the technology presents before reaping its benefits. The state must undertake a swift and comprehensive attack on the gross systems that support such markets, cooperate with emerging technologies in identification and categorisation, ensure that intermediaries play their part in comprehensive removal procedures, and hold bad actors statutorily liable for an irreversible act that violates the consent, personal autonomy and freedom of individuals. Every individual deserves an open and safe internet, and to achieve that end, the authors believe that criminal law is the sword needed to eliminate the offence at its roots.
